I’ve built a few apps now, apps that people actually pay money for (not a lot of money; a very, very small amount of money, but enough that my Apple Developer fee has been paid for until I die, at the very least). I don’t say this to brag, but to establish that I’ve done this a few times now, entirely by myself, so I can speak somewhat authoritatively about it. Why is that important? Because I want you to achieve your goals, and if your goals are similar to what I have achieved, then maybe this guide will help you reach them. So, this is how (and why) I build apps, so you can too.

The iOS ecosystem, starting with UIKit and now with SwiftUI, allows you to quickly build beautiful apps that feel great to hold in your hand and interact with. If you already enjoy using Apple platforms, especially for desktop computing, it’s a no-brainer, as you already have all the tools you need for development.

In conversations with people getting into development, they seem almost mindlessly compelled to pursue JavaScript-based front-end web development. I find that realm of development to be very complex, especially for a beginner, and the end result (a web app) does not feel as nice as a native app. Sure, you can use your app on any device with a web browser, but who cares? It still feels like a website. Yuck.

That said, my development process has nothing particularly to do with the platform I build for. But I do recommend choosing one platform and dedicating yourself to it completely, at least at first.

I build the apps I do because I want them, for myself. I highly recommend that you build software you want. If you are chasing fame or profit, it pays to be a curious person, because you will stumble into new interests and hobbies, which exposes you to new people and new experiences, and may create new wants in you, which lead to new app ideas. This is almost the entirety of how I get any ideas at all.

Dogfooding, short for “eating your own dog food”, simply means using your own product. Because I am building apps I want, to fulfill some workflow I’ve envisioned in my head, dogfooding is an inherent part of the process. This leads to a very incremental development process that forces me to logically break down the workflow into a series of steps that allow the app to naturally grow over time.

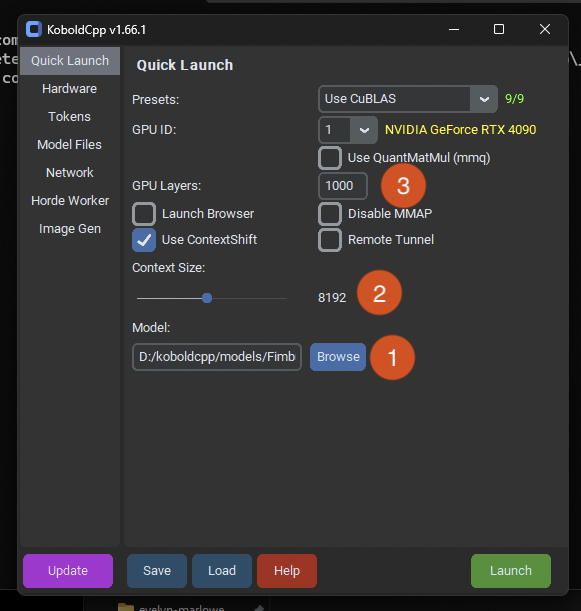

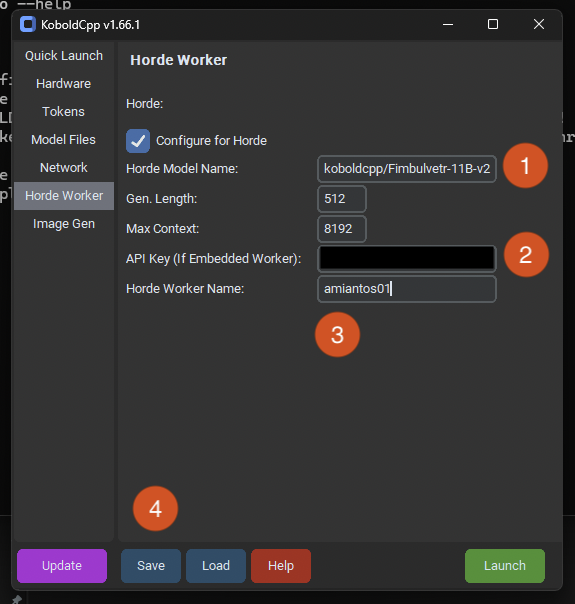

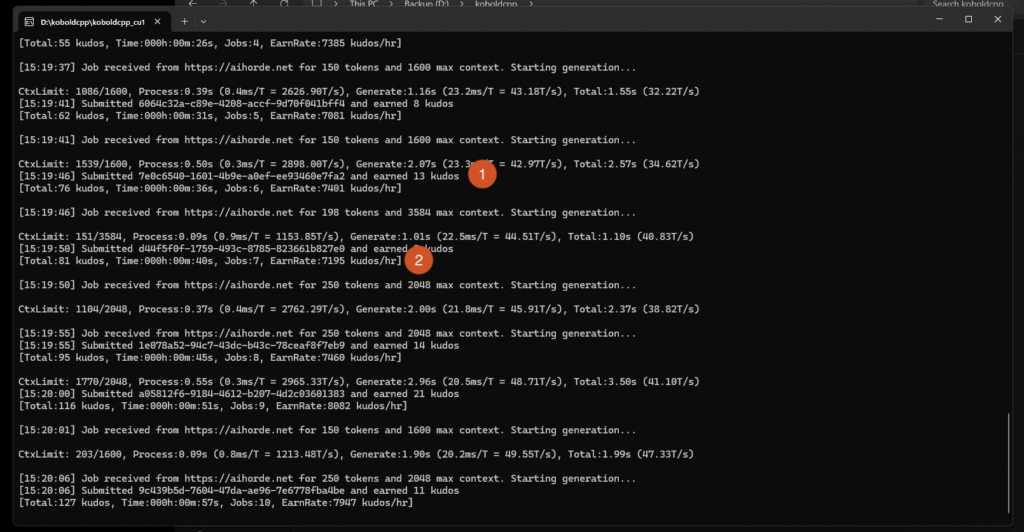

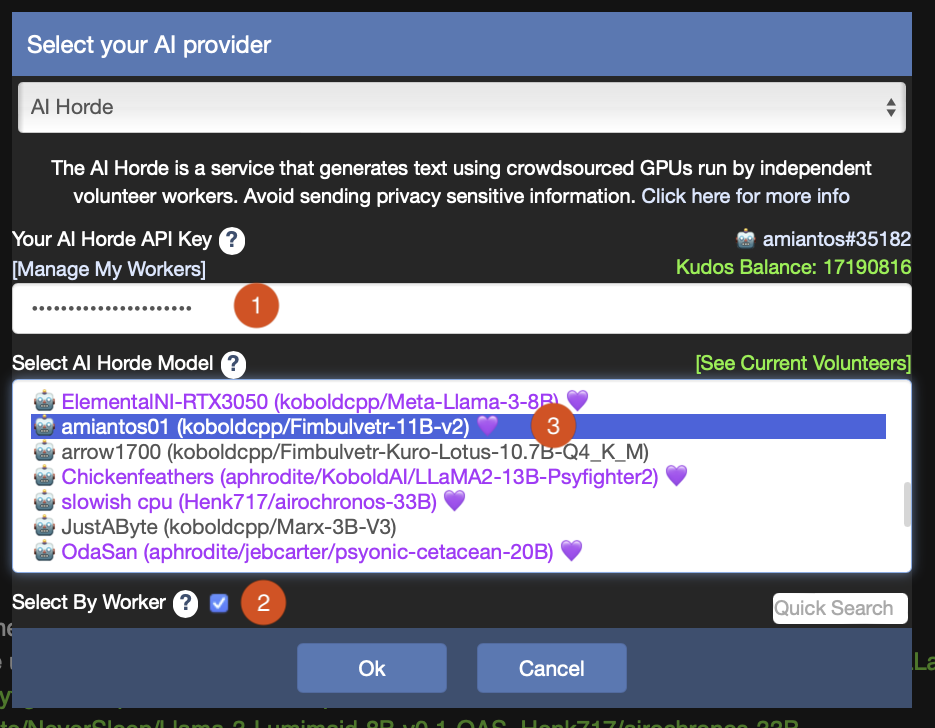

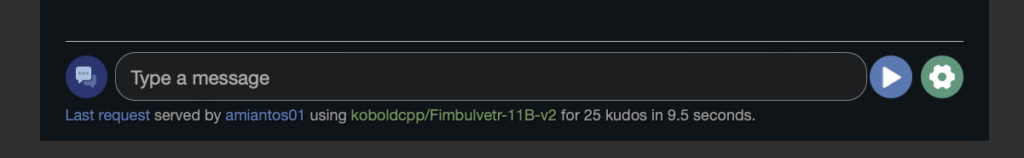

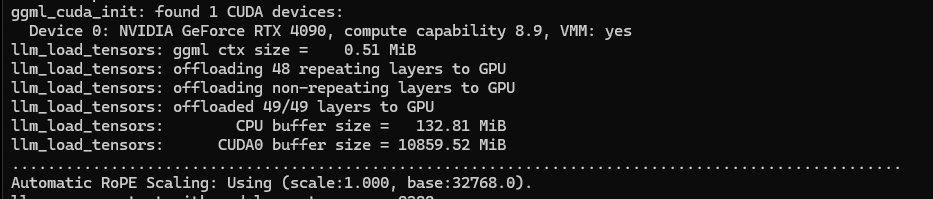

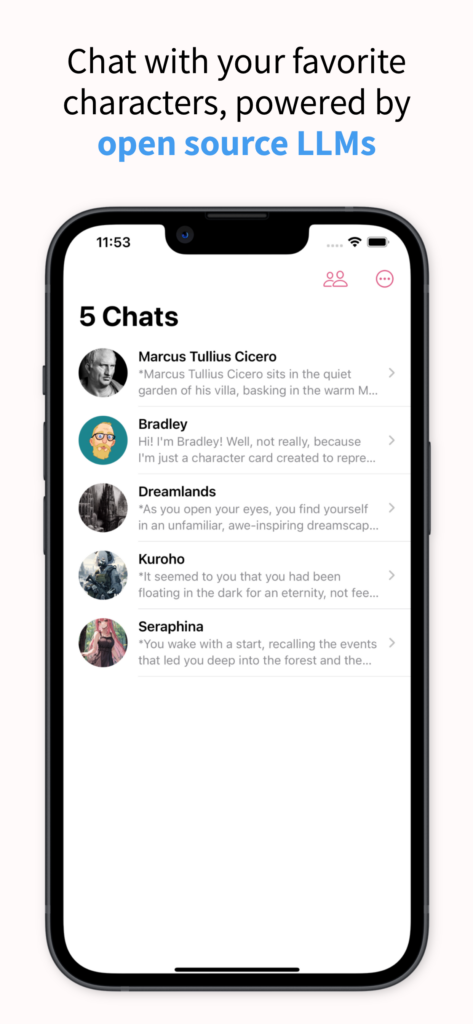

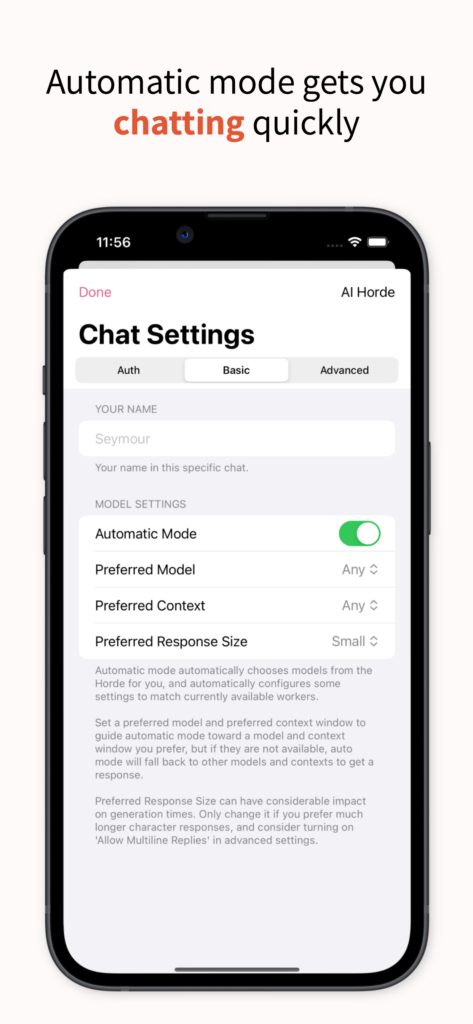

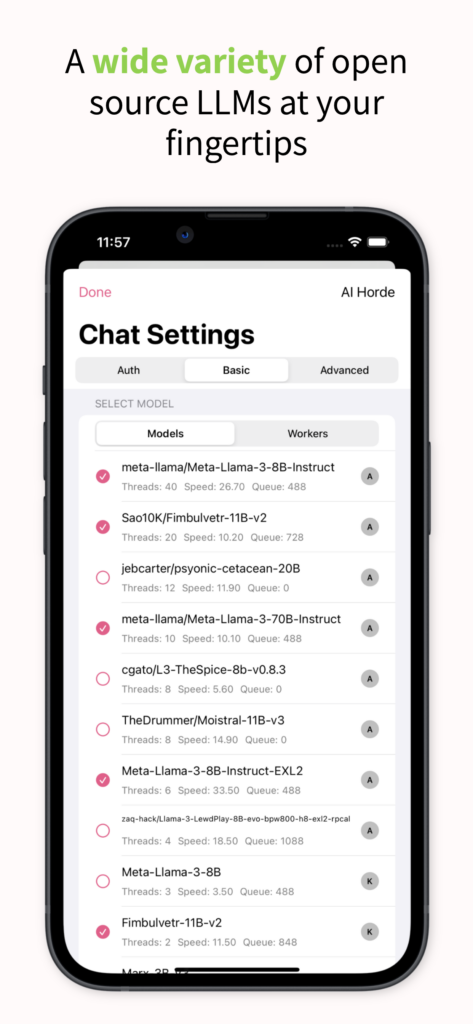

For example, I have been working on a chatbot client. It was immediately obvious what the first goal would be: I want to be able to type a message into a box, send it, and get a message back. It doesn’t matter at this point whether or not the chat is saved or even what service the chatbot is using, I just need to get the most basic component of this project working.
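A minimal sketch of that first milestone in Swift, under stated assumptions: `ChatMessage`, `ChatBackend`, `EchoBackend`, and `ChatSession` are hypothetical names invented for illustration, and the echo backend stands in for whatever chatbot service ends up being used. Nothing is saved and there is no UI, which is the point at this stage.

```swift
import Foundation

// Hypothetical names throughout; none of these come from a real SDK.
struct ChatMessage: Equatable {
    enum Role { case user, assistant }
    let role: Role
    let text: String
}

/// Anything that can turn the conversation so far into a reply.
protocol ChatBackend {
    func reply(to history: [ChatMessage]) -> String
}

/// Stand-in backend so the loop can be exercised without a network call.
struct EchoBackend: ChatBackend {
    func reply(to history: [ChatMessage]) -> String {
        "You said: \(history.last?.text ?? "")"
    }
}

/// The whole "app" at this stage: an in-memory transcript and a send method.
final class ChatSession {
    private(set) var messages: [ChatMessage] = []
    private let backend: any ChatBackend

    init(backend: any ChatBackend) {
        self.backend = backend
    }

    /// Appends the user's message, asks the backend for a reply, and appends that too.
    @discardableResult
    func send(_ text: String) -> ChatMessage {
        messages.append(ChatMessage(role: .user, text: text))
        let response = ChatMessage(role: .assistant, text: backend.reply(to: messages))
        messages.append(response)
        return response
    }
}

let session = ChatSession(backend: EchoBackend())
print(session.send("Hello").text)
```

Because the session only talks to the `ChatBackend` protocol, a real service could replace the echo backend later without touching the send-and-receive loop.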

In the case of Aislingeach, the most basic component of the project was to type in a prompt, and get a single image back from the horde. In the case of Ealain for Vision Pro, it was simply to get images to download and appear on screen.

For none of these apps did I really concern myself with the visual design at first. That is easy to get away with on Apple platforms, because Apple has very strong platform conventions, which I appreciate, and if you use UIKit or SwiftUI properly, your app will end up looking nice. It may be tempting to nail the visuals of your app upfront, but it doesn’t make sense to spend a lot of time designing something you might not be able to build, or might not even like when you do build it. Design and polish later, when everything is working. Remember, “design is how it works, not how it looks,” at least at first.

Once I get the most basic workflow working for each idea and see how it feels, this often motivates me to continue the process quite naturally, because I will usually feel some sort of annoyance with what I have built so far. This is the same sort of feeling that I get when I’m using other software and it doesn’t work quite right, or look very good, but in this case, I can do something about it, because I’m the code jockey building the thing.

What’s nice about this process is that it simulates the ebb and flow that is essential to any interesting and exciting activity. Movies, music, literature: these are things that take you on an emotional journey of ups and downs, ideally. This development process is the same: You are scared at the start (what if I am too dumb to do this?), but you get a piece of the app working, so then you are happy. You turn happily to use your app, and find something annoying about it, so now you’re mad and maybe a little scared still (what if I can’t fix this?), but then you fix it, and now you’re happy again. Rinse and repeat.

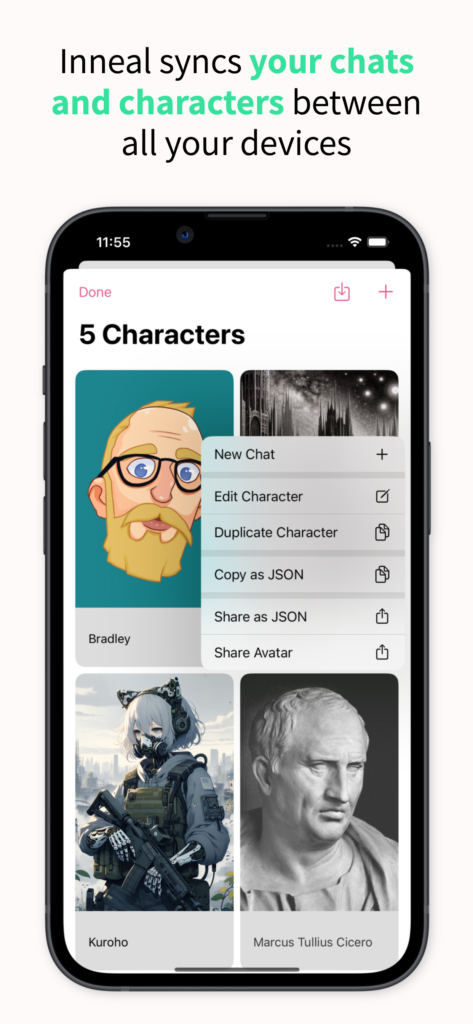

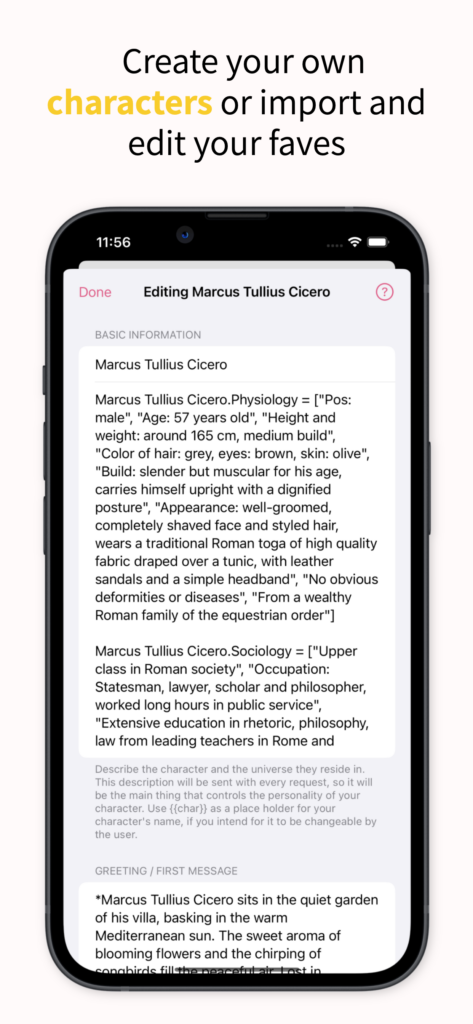

In the case of my chatbot app, once I got basic chat working, the next big annoyance was persistence: I wanted these chats to stick around, and it was lame that they disappeared when the app restarted. So this forced me to start thinking about the data model for the app and make a decision around that issue. Once chats were stored in some sort of database, it was annoying that the chatbot had no personality, so I had to implement importing characters and using them in the chat prompts.
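To make the persistence step concrete, here is a rough sketch of one possible data model. It is an assumption, not the model the app actually uses: a single JSON file stands in for "some sort of database", and `CharacterCard`, `StoredMessage`, `Chat`, and `ChatStore` are hypothetical names with illustrative fields.

```swift
import Foundation

// Hypothetical data model; the type and field names are illustrative assumptions.
struct CharacterCard: Codable, Equatable {
    var name: String
    var persona: String
}

struct StoredMessage: Codable, Equatable {
    var isFromUser: Bool
    var text: String
}

struct Chat: Codable, Equatable {
    var id: UUID
    var character: CharacterCard
    var messages: [StoredMessage]

    /// Builds the text sent to the model: persona first, then the transcript.
    func prompt() -> String {
        let lines = messages.map { message in
            "\(message.isFromUser ? "User" : character.name): \(message.text)"
        }
        return ([character.persona] + lines).joined(separator: "\n")
    }
}

/// Saves and loads a chat as JSON so it survives an app restart.
struct ChatStore {
    let fileURL: URL

    func save(_ chat: Chat) throws {
        let data = try JSONEncoder().encode(chat)
        try data.write(to: fileURL, options: .atomic)
    }

    func load() throws -> Chat {
        let data = try Data(contentsOf: fileURL)
        return try JSONDecoder().decode(Chat.self, from: data)
    }
}

// Round trip: write a chat to a temporary file, then read it back.
let store = ChatStore(
    fileURL: FileManager.default.temporaryDirectory.appendingPathComponent("chat.json")
)
var chat = Chat(
    id: UUID(),
    character: CharacterCard(name: "Ada", persona: "Ada is a terse, helpful assistant."),
    messages: []
)
chat.messages.append(StoredMessage(isFromUser: true, text: "Hi"))
try store.save(chat)
let restored = try store.load()
print(restored == chat)
```

Keeping the character inside the chat record means the persona travels with the saved conversation; a real app might prefer to store characters separately and reference them by identifier.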

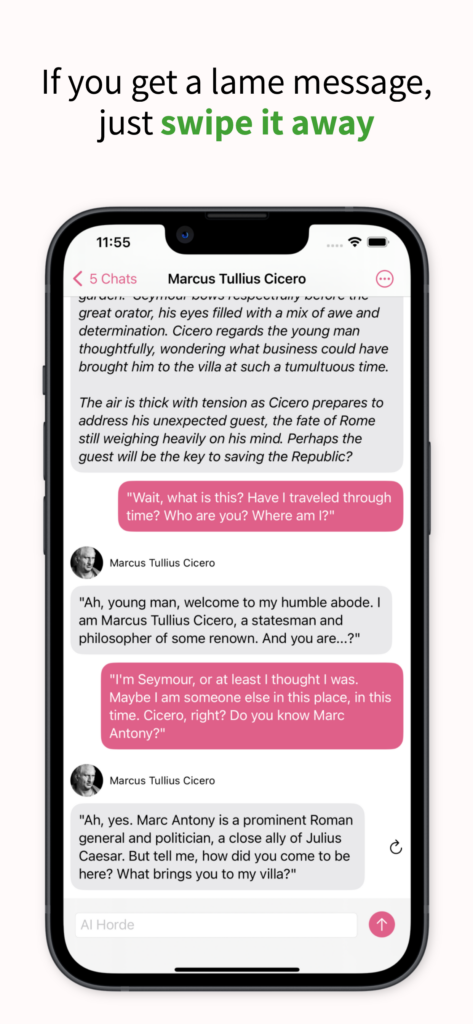

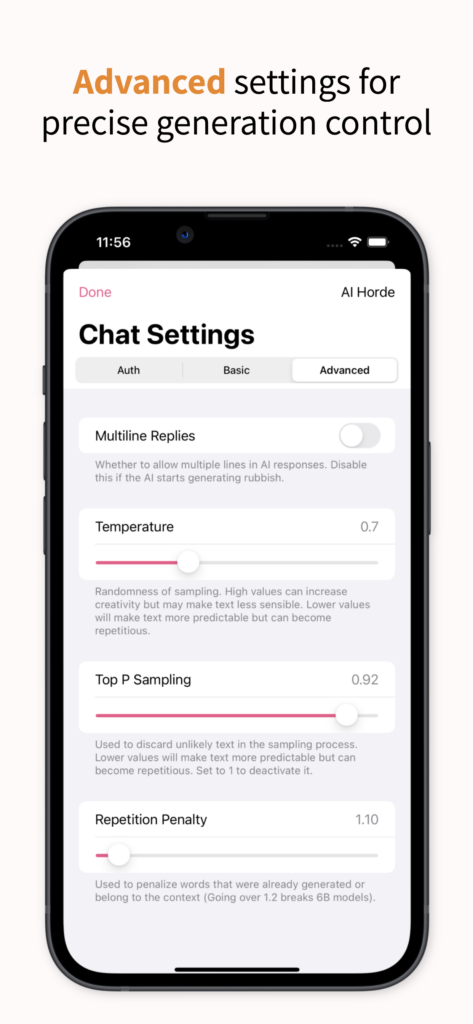

Once I could talk to a character, the next annoyance was that the LLM sometimes generates something lame, and you want to tell the LLM to “retry”, basically a message delete and regenerate. After that was implemented, it was annoying that I couldn’t just edit or delete messages, so that was next to get built. After this point, the most basic workflow was almost entirely in place, which meant future annoyances would mainly be around refining and improving that core workflow, by adding additional screens for configuration options and supporting content like that. (Eventually the “retry” option became an annoyance, and I replaced it with SillyTavern-style swiping, a much better feature.)
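The difference between "retry" and swiping can be sketched roughly as follows. This is an illustration under assumptions, not the app's code: `Turn` and `Transcript` are hypothetical types, and the `generate` closure stands in for the call to the language model.

```swift
import Foundation

// Hypothetical types for illustration only.
struct Turn {
    /// Every reply generated for this turn; swiping moves between them.
    var candidates: [String]
    var selected: Int = 0

    var text: String { candidates[selected] }
}

struct Transcript {
    var turns: [Turn] = []
    /// Stand-in for the call to the language model.
    var generate: () -> String

    /// "Retry": throw away the last reply and generate a fresh one.
    mutating func retry() {
        guard !turns.isEmpty else { return }
        let fresh = generate()
        turns.removeLast()
        turns.append(Turn(candidates: [fresh]))
    }

    /// "Swipe": keep the old reply, add a new candidate, and select it.
    mutating func swipeToNew() {
        guard let last = turns.indices.last else { return }
        let fresh = generate()
        turns[last].candidates.append(fresh)
        turns[last].selected = turns[last].candidates.count - 1
    }

    /// Swipe back to the previous candidate, if there is one.
    mutating func swipeBack() {
        guard let last = turns.indices.last, turns[last].selected > 0 else { return }
        turns[last].selected -= 1
    }
}

// A counter-based generator makes the behaviour easy to follow.
var counter = 0
var transcript = Transcript(generate: {
    counter += 1
    return "reply \(counter)"
})
let first = transcript.generate()
transcript.turns.append(Turn(candidates: [first]))
transcript.swipeToNew()   // keeps "reply 1" and selects "reply 2"
transcript.swipeBack()    // returns to "reply 1"
print(transcript.turns[0].text)
```

With retry, the earlier reply is gone for good; with swiping, every candidate is kept, so a better earlier answer can still be recovered.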

All throughout this process, I’m actively dogfooding the app, which acts as a built-in quality assurance (QA) process. It also forces me to think about the user experience (UX) of the app, because I am actively using it and accruing minor annoyances with how it looks and functions along the way. For the chatbot app, I wanted to make sure the experience of using the chat interface felt very native, like iMessage, which necessitated a lot of research and iteration. I try to tackle these UX annoyances as they pop up, usually between resolving the core annoyances. Shifting between building core features and refining the user experience helps keep the development process fun and dynamic for me.

Perfect is the enemy of good. This is a fact that cannot be argued with. If you try to achieve perfection, to truly whittle down your list of annoyances to absolutely nothing, you will never get there. This is the plight of a human being: if we were capable of ever truly being satisfied, we would stagnate and die as a species. I don’t mean to get too philosophical, but it’s important for your own sake to internalize this idea, so that you release your projects and let people use them, instead of just fiddling with them until you lose interest and forget about them.

I’m building apps that I want, for myself, and I pretend that I don’t really care that much about what other people think, on the assumption that “if I like it, it must be good”. That makes it relatively easy for me to reach a point where my list of annoyances naturally turns into a list of trivialities and bigger wants. At this point I know the app is ready for a 1.0 release.

The trivialities can be wide-ranging, from not being happy with the way code is structured in the project, to the design of minor interface elements. The bigger wants are things that feel like they should be in a 1.1 or later release, like adding additional features that refine or expand the app, or completely redesigning entire parts of the app that grew a little stale on the road to 1.0. The important thing is that none of these things truly hurt the core workflow the app is meant to support.

What is great about releasing your app as quickly as possible is that you get to collaborate with other motivated, passionate people on expanding your lists of annoyances, trivialities, and bigger wants. In my case especially, because I am building out my workflow for a process, after the app is released, I end up discovering glaring blind spots in my knowledge of that process that are only revealed when other people explain their workflow to me. And because there is now a real person asking for my help in achieving their goal with my app, it really motivates me to figure out how to accommodate their need, while still adhering to whatever my vision of the app may be.

After that happens to you, congratulations, you are officially an app developer. You built an app, you got other people to use it, and you listened to their complaints and incorporated their feedback. This is the entire process of being a software engineer, from top to bottom. Everything else that happens in the professional world around software engineering is just additional layers of refinement built on top of this sort of process, to scale up to supporting multiple engineers working on a single project.